NiceLinks

Automated Broken Link Checker

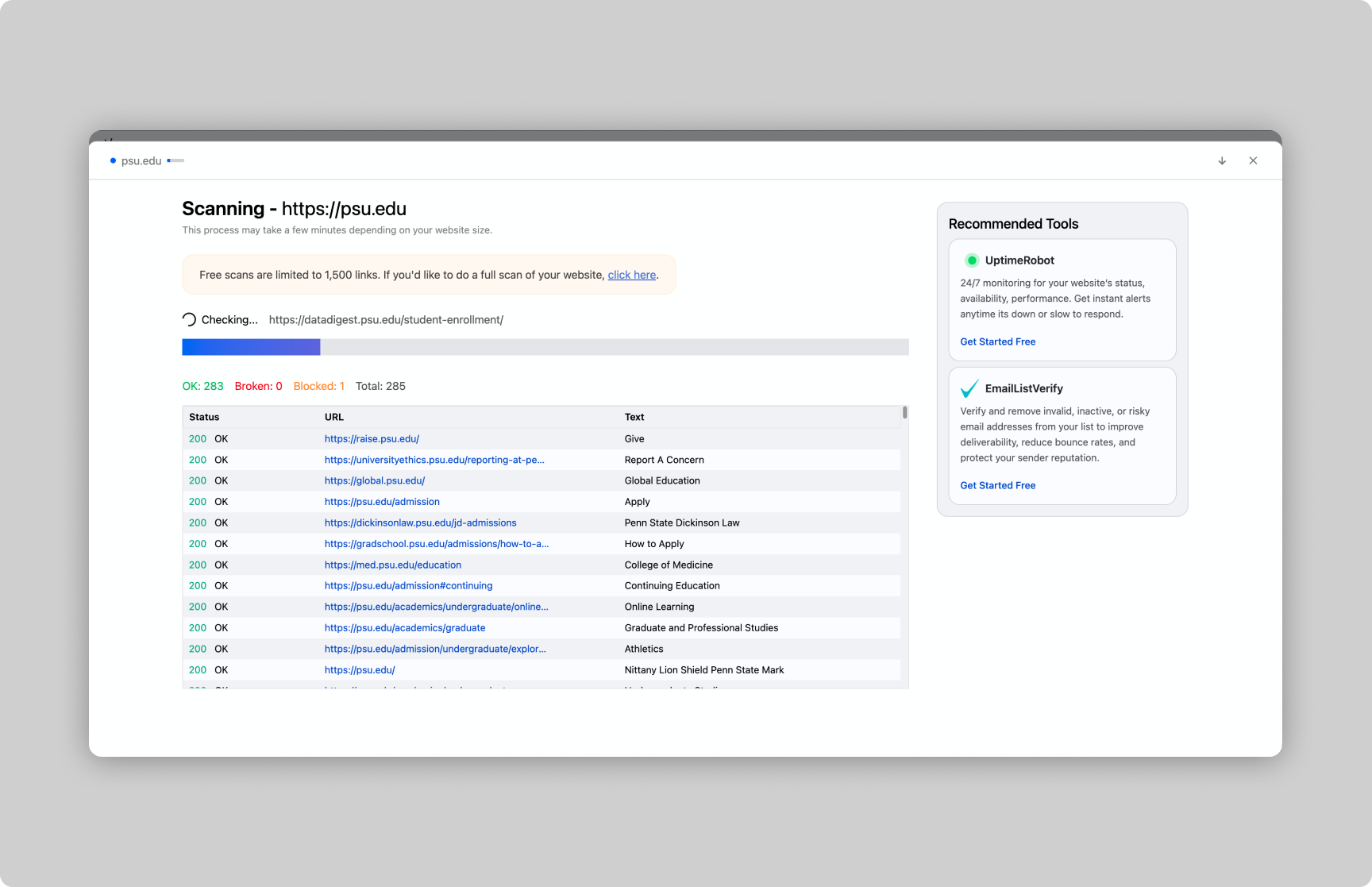

NiceLinks is a web-based utility designed to help developers, content teams, and site owners automatically identify and report broken links across one or more URLs. It parses pages, follows internal and external links, and generates an actionable report showing missing or unreachable resources. Built as a lightweight, full-stack side project, NiceLinks addresses a persistent problem for anyone maintaining content-heavy or frequently updated websites.

Problem

Broken links are a common issue on modern websites. They hurt the user experience, damage SEO, and can go unnoticed for long periods, especially on sites with large content footprints or frequent content changes. Manual checking is slow and error-prone, and existing tooling can be expensive, over-engineered, or ill-suited for automated workflows.

NiceLinks was created to provide a simple, affordable, and effective solution for finding broken links on demand.

Solution

NiceLinks crawls a given site or list of URLs, systematically discovering linked resources and verifying their reachability. It then aggregates errors, redirects, HTTP status codes, and unreachable endpoints into a clean, shareable report.

The core capabilities include:

Recursive crawling: Automatically explores internal links up to a configurable depth

HTTP status monitoring: Detects 4xx/5xx responses and unreachable endpoints

Redirect awareness: Tracks redirect chains and flags redirect loops

Exportable reports: Produces CSV or JSON summaries for audits and fixes

Fast feedback: Returns results quickly for single on-demand requests or batch inputs
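Redirect awareness means following each 3xx response hop by hop rather than only looking at the final status. The sketch below shows one way to do that under stated assumptions: `lookup` is a hypothetical callable that returns a status code and `Location` header for a URL (in practice, an HTTP request with automatic redirect following turned off), and `follow_redirects`, `RedirectResult`, and `max_hops` are illustrative names, not NiceLinks' actual code.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple
from urllib.parse import urljoin

# Hypothetical lookup: returns (status_code, Location header or None) for one URL,
# e.g. from an HTTP request made with automatic redirect following disabled.
Lookup = Callable[[str], Tuple[int, Optional[str]]]


@dataclass
class RedirectResult:
    chain: list[str]             # every URL visited, starting with the original link
    final_status: Optional[int]  # None if the hop limit was reached first
    loop: bool = False


def follow_redirects(start: str, lookup: Lookup, max_hops: int = 10) -> RedirectResult:
    """Follow 3xx responses from `start`, recording each hop and flagging loops."""
    chain = [start]
    url = start
    for _ in range(max_hops):
        status, location = lookup(url)
        if not 300 <= status < 400 or location is None:
            return RedirectResult(chain, status)  # chain ended on a non-redirect
        next_url = urljoin(url, location)  # Location headers may be relative
        revisited = next_url in chain
        chain.append(next_url)
        if revisited:
            return RedirectResult(chain, status, loop=True)
        url = next_url
    return RedirectResult(chain, None)  # too many hops without resolving
```

Recording the full chain (not just the final status) is what lets a report show long redirect chains worth shortening, in addition to outright loops.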

This tooling helps maintainers quickly locate link issues that can degrade site quality or user experience.

Features

Batch URL Input

Users can submit a single URL or an entire list of URLs to scan multiple entry points in one session.
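A single URL and a pasted list can share one input path if the input is treated as one URL per line. This is a minimal sketch assuming that format; `parse_url_batch` is a hypothetical helper, and rejecting anything that is not an absolute http(s) URL is an illustrative validation choice.

```python
from urllib.parse import urlsplit


def parse_url_batch(text: str) -> tuple[list[str], list[str]]:
    """Split pasted input (one URL per line) into (valid, invalid) entries."""
    valid: list[str] = []
    invalid: list[str] = []
    seen: set[str] = set()
    for line in text.splitlines():
        candidate = line.strip()
        if not candidate:
            continue  # ignore blank lines
        parts = urlsplit(candidate)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            invalid.append(candidate)  # report back to the user instead of crawling
        elif candidate not in seen:
            seen.add(candidate)  # drop exact duplicates, keep first-seen order
            valid.append(candidate)
    return valid, invalid
```

Returning the invalid entries, rather than silently dropping them, lets the UI tell the user which lines were skipped.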

Recursive Link Discovery

NiceLinks crawls linked pages and discovers nested internal links, giving a wider picture of link health across a site.
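Discovering links comes down to parsing each page's HTML, resolving relative `href` values against the page URL, and separating internal links (to crawl further) from external ones (to check only). The sketch below uses Python's standard `html.parser` as an assumption, since the parser NiceLinks actually uses is not specified; `LinkExtractor` and `extract_links` are hypothetical names, and treating "same host" as "internal" is a simplifying choice.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkExtractor(HTMLParser):
    """Collect absolute http(s) URLs from <a href="..."> attributes."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and strip #fragments (same document).
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                if urlsplit(absolute).scheme in ("http", "https"):  # skips mailto:, etc.
                    self.links.append(absolute)


def extract_links(base_url: str, html: str) -> tuple[list[str], list[str]]:
    """Return (internal, external) links found on one page, compared by host."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    host = urlsplit(base_url).netloc
    internal = [u for u in parser.links if urlsplit(u).netloc == host]
    external = [u for u in parser.links if urlsplit(u).netloc != host]
    return internal, external
```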

Status Code Classification

Each link is categorized by the outcome of its check, with clear differentiation between:

Valid (2xx)

Redirects (3xx)

Errors (4xx/5xx)

Timeouts and network failures (no HTTP response)
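The categories above can be expressed as a small classification function. This is a sketch, not NiceLinks' code: `classify` and its category strings are hypothetical, `status` is assumed to be `None` whenever no HTTP response arrived, and timeouts are kept separate from other network failures so that reports can list them individually.

```python
from typing import Optional


def classify(status: Optional[int], timed_out: bool = False) -> str:
    """Map the outcome of one link check to a report category."""
    if status is None:
        # No HTTP response at all: the request timed out or the host was unreachable.
        return "timeout" if timed_out else "unreachable"
    if 200 <= status < 300:
        return "valid"
    if 300 <= status < 400:
        return "redirect"
    if 400 <= status < 600:
        return "error"  # 4xx client errors and 5xx server errors
    return "unknown"  # e.g. 1xx informational responses
```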

Actionable Reports

Reports highlight:

Broken links (404s, 500s)

Redirect chains

Unreachable hosts

Timeouts

These can be exported for stakeholder review or integrated into QA workflows.
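Exporting results to CSV and JSON needs only the standard library. The sketch assumes a hypothetical report schema of one row per checked link (`source_page`, `url`, `status`, `category`); the field names and the `problems_only` filter are illustrative, not NiceLinks' actual export format.

```python
import csv
import io
import json

# Hypothetical report schema: one row per checked link.
FIELDS = ["source_page", "url", "status", "category"]


def problems_only(rows: list[dict]) -> list[dict]:
    """Keep only rows that need attention (anything not classified as valid)."""
    return [row for row in rows if row["category"] != "valid"]


def to_csv(rows: list[dict]) -> str:
    """Serialize report rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize report rows as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)
```

Including the page where each link was found (`source_page`) is what makes a report actionable: it tells the reader which page to edit, not just which URL is broken.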

Lightweight, Accessible UI

A simple interface makes it easy for both technical and non-technical users to run checks and interpret results.

Technical Overview

NiceLinks is built as a full-stack web tool with a clean separation between crawling logic and the user interface.

Crawling & Link Verification

A server-side crawler parses HTML and follows the link graph

Uses asynchronous HTTP requests to check many links concurrently

Implements rate limiting and timeouts to avoid overloading target sites
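Asynchronous checks, throttling, and timeouts fit together naturally in `asyncio`. In this sketch a semaphore caps the number of in-flight requests, which is one simple form of throttling (a strict requests-per-second limiter would be a refinement), and `asyncio.wait_for` enforces a per-request timeout. `fetch` is a hypothetical coroutine returning a status code; the real HTTP client, limits, and names (`check_all`, `max_concurrency`, `timeout_s`) are assumptions.

```python
import asyncio
from typing import Awaitable, Callable, Optional

# Hypothetical fetcher: performs one HTTP request and returns the status code.
Fetch = Callable[[str], Awaitable[int]]


async def check_all(
    urls: list[str], fetch: Fetch, max_concurrency: int = 5, timeout_s: float = 10.0
) -> dict[str, Optional[int]]:
    """Check URLs concurrently; a value of None marks a timeout or network failure."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def check_one(url: str) -> tuple[str, Optional[int]]:
        async with semaphore:  # at most max_concurrency requests in flight
            try:
                return url, await asyncio.wait_for(fetch(url), timeout=timeout_s)
            except (asyncio.TimeoutError, OSError):  # OSError covers connection errors
                return url, None

    results = await asyncio.gather(*(check_one(u) for u in urls))
    return dict(results)
```

Usage: `asyncio.run(check_all(urls, fetch))` from synchronous code, or `await check_all(...)` inside an existing event loop.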

Frontend

Responsive UI for submitting URLs, configuring scans, and viewing results

Tabular results with filtering and sorting

Scalability Considerations

Queue-based crawling to handle large sites

Caching or deduplication logic so the same URL is not checked more than once
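Queue-based crawling with deduplication and a depth limit is essentially a breadth-first search. The sketch below uses an in-memory `collections.deque` as a stand-in for whatever queue NiceLinks actually uses (a very large site might need a persistent job queue), and `get_links` is a hypothetical callable returning the internal links found on a page.

```python
from collections import deque
from typing import Callable, Iterable


def crawl(start: str, get_links: Callable[[str], Iterable[str]], max_depth: int = 2) -> list[str]:
    """Breadth-first crawl from `start`, visiting each URL at most once."""
    visited = {start}           # every URL ever enqueued (deduplication set)
    order: list[str] = []       # pages in the order they were processed
    queue = deque([(start, 0)])
    while queue:
        url, depth = queue.popleft()
        order.append(url)
        if depth == max_depth:
            continue  # do not expand links beyond the configured depth
        for link in get_links(url):
            if link not in visited:  # enqueue each URL only once
                visited.add(link)
                queue.append((link, depth + 1))
    return order
```

Marking a URL as visited when it is enqueued, rather than when it is processed, prevents the same page from entering the queue twice when several pages link to it.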

The architecture prioritizes speed, reliability, and extensibility, allowing potential future integrations (e.g., CI/CD checks, scheduled scans, or API access).

Use Cases

NiceLinks is practical for:

QA teams verifying link health before releases

Content maintainers auditing large sites

SEO specialists tracking broken links that impact search rankings

Developers integrating link checks into automated workflows

Marketing teams ensuring shared resources remain valid

Impact

NiceLinks adds value by automating a labor-intensive, error-prone task with a tool that’s fast, transparent, and accessible to technical and non-technical users alike. It also demonstrates the ability to build robust tooling, architect crawling systems, and present actionable insights in a clean interface.